Presentation

Scaling Infrastructure to Support Multi-Trillion Parameter LLM Training

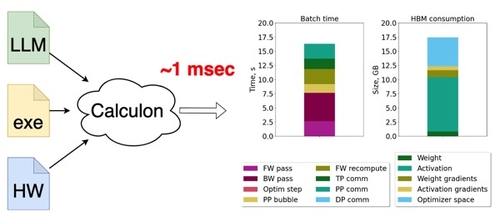

DescriptionThis poster discusses efficient system designs for Large Language Model (LLM) scaling to up to 128 trillion parameters. We use a comprehensive analytical performance model to analyze how such models could be trained on current systems while maintaining 75% Model FLOPS Utilization (MFU). We first show how tensor offloading alone can be used to dramatically increase the size of trainable LLMs. We analyze performance bottlenecks when scaling on systems up to 16,384 GPUs and with models up to 128T parameters. Our findings suggest that current H100 GPUs with 80 GiB of HBM enabled with 512 GiB of tensor offloading capacity allows scaling to 11T-parameter LLMs; and getting to 128T parameters requires 120 GiB of HBM and 2 TiB of offloading memory, yielding 75%+ MFU, which is uncommon even when training much smaller LLMs today.

Event Type

ACM Student Research Competition: Graduate Poster

ACM Student Research Competition: Undergraduate Poster

Posters

TimeTuesday, 14 November 202310am - 5pm MST

LocationDEF Concourse

TP

XO/EX

Archive

view