Posters Gallery

Tuesday, 14 November 2023 10am-5pm E Concourse

Hide Details

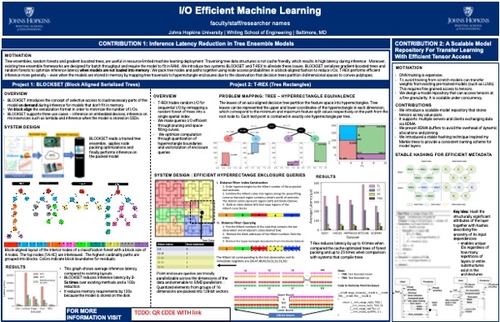

I/O Efficient Machine Learning

DescriptionMy research focuses on systems optimizations for machine learning, specifically on I/O efficient model storage and retrieval.

The first part of my work focuses on efficient inference serving of tree ensemble models. Tree structures are inherently not cache friendly and their traversal incurs random I/Os. We developed two systems - Blockset (Block Aligned Serialized Trees) and T-REX (Tree Rectangles).

Blockset improves inference latency in the scenario where the model doesn’t fit in memory. It introduces the concept of selective access for tree ensembles in which only the parts of the model needed for inference are deserialized and loaded into memory. It uses principles from external memory algorithms to rearrange tree nodes in a block aligned format to minimize the number of I/Os needed for inference. T-REX optimizes inference latency for both in-memory inference as well as inference when the model doesn’t fit in memory. T-REX reformulates decision tree traversal as hyperrectangle enclosure queries using the fact that decision trees partition the space into convex hyperrectangles. The test points are then queried for enclosure inside the hyperrectangles. In doing random I/O is traded for additional computation.

The second part of my work focuses on efficient deep learning model storage. We implemented a deep learning model repository that requires fine-grained access to individual tensors in models. This is useful in applications such as transfer learning, where individual tensors in layers are transferred from one model to another. We’re currently working on caching and prefetching popular tensors based on application level hints.

The first part of my work focuses on efficient inference serving of tree ensemble models. Tree structures are inherently not cache friendly and their traversal incurs random I/Os. We developed two systems - Blockset (Block Aligned Serialized Trees) and T-REX (Tree Rectangles).

Blockset improves inference latency in the scenario where the model doesn’t fit in memory. It introduces the concept of selective access for tree ensembles in which only the parts of the model needed for inference are deserialized and loaded into memory. It uses principles from external memory algorithms to rearrange tree nodes in a block aligned format to minimize the number of I/Os needed for inference. T-REX optimizes inference latency for both in-memory inference as well as inference when the model doesn’t fit in memory. T-REX reformulates decision tree traversal as hyperrectangle enclosure queries using the fact that decision trees partition the space into convex hyperrectangles. The test points are then queried for enclosure inside the hyperrectangles. In doing random I/O is traded for additional computation.

The second part of my work focuses on efficient deep learning model storage. We implemented a deep learning model repository that requires fine-grained access to individual tensors in models. This is useful in applications such as transfer learning, where individual tensors in layers are transferred from one model to another. We’re currently working on caching and prefetching popular tensors based on application level hints.

Event Type

Doctoral Showcase

Posters

TimeTuesday, 14 November 202310am - 5pm MST

LocationE Concourse

Artificial Intelligence/Machine Learning

I/O and File Systems

TP

XO/EX

Archive

view

Hide Details

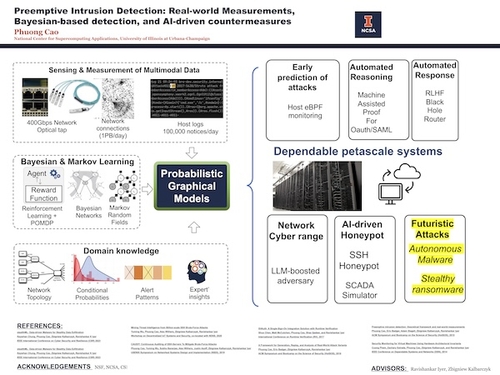

Preemptive Intrusion Detection: Real-World Measurements, Bayesian-Based Detection, and AI-Driven Countermeasures

DescriptionThe problem of preempting attacks before damages remains the top security priority. The gap between alerts and early detection remains wide open because noisy attack attempts and unreliable alerts mask real attacks from humans. This dissertation brings together: 1) attack patterns mining driven by real security incidents, 2) probabilistic graphical models linking patterns with runtime alerts, and 3) an in vivo testbed which embeds a honeypot in a live Science DMZ network for realistic assessment. Traditional techniques that seek specific attack signatures or anomalies are ineffective because defenders only see a partial view of ongoing attacks while having to wrestle with unreliable alerts and heavy background noise of attack attempts. In contrast, our principle objective is to reinforce scant, incomplete evidence of potential attacks with the ground truth of past security incidents. We evaluated our system, Cyborg's, accuracy, and performance in three experiments at the National Center for Supercomputing Applications at the University of Illinois. Our deployment stops 8 out of 10 replayed attacks before system integrity violation and all ten before data exfiltration. In addition, we discovered and stopped a family of ransomware attacks before the data breach. During the period of deployment, this thesis resulted in a honeypot that collected 15 billion attack attempts (the world's largest publicly analyzed dataset) for analytics. In the future, we are looking at integrating AI techniques such as large language models to build intelligent honeypot systems that are indistinguishable from real systems to collect attack intelligence and educate the security operator.

Event Type

Doctoral Showcase

Posters

TimeTuesday, 14 November 202310am - 5pm MST

LocationE Concourse

Artificial Intelligence/Machine Learning

Security

TP

XO/EX

Archive

view

Hide Details

High Performance Serverless for HPC and Clouds

DescriptionFunction-as-a-Service (FaaS) computing brought a fundamental shift in resource management. It allowed for new and better solutions to the problem of low resource utilization, an issue that has been known in data centers for decades. The problem persists as the frequently changing resource availability cannot be addressed entirely with techniques such as persistent cloud allocations and batch jobs. The elastic fine-grained tasking and largely unconstrained scheduling of FaaS create new opportunities. Still, modern serverless platforms struggle to achieve the high performance needed for the most demanding and latency-critical workloads. Furthermore, many applications cannot be “FaaSified” without non-negligible loss in performance, and the short and stateless functions employed in FaaS must be easy to program, debug, and optimize. By solving the fundamental performance challenges of FaaS, we can build a fast and efficient programming model that brings innovative cloud techniques into HPC data centers, allowing users to benefit from pay-as-you-go billing and helping operators to decrease running costs and their environmental impact. My PhD research attempts to bridge the gap between high-performance programming and modern FaaS computing frameworks. I have been working on tailored solutions for different levels of the FaaS computing stack: from computing and network devices to high-level optimizations and efficient system designs.

Event Type

Doctoral Showcase

Posters

TimeTuesday, 14 November 202310am - 5pm MST

LocationE Concourse

Cloud Computing

TP

XO/EX

Archive

view

Hide Details

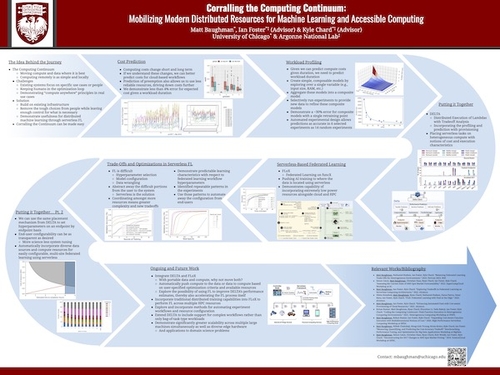

Corralling the Computing Continuum: Mobilizing Modern Distributed Resources for Machine Learning and Accessible Computing

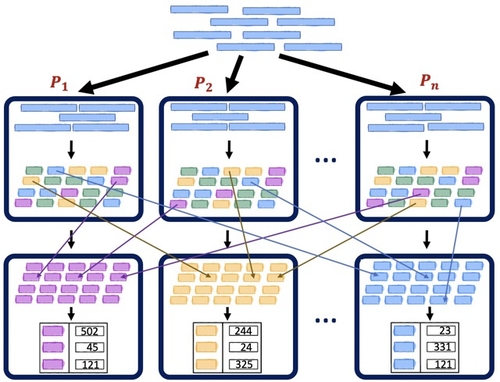

DescriptionTo achieve the resource agnostic flexibility of compute described by the computing continuum, we combined our work in workload profiling and cost estimation with task provisioning to present DELTA–a framework for serverless workload placement across a computing ecosystem. To address the dynamic availability of modern computing resources as well as the multiple costs involved in computing, we presented extensions of our framework as DELTA+ which demonstrated the ability for resource provisioning and multidimensional compute costs.

To bring this idea of resource abstraction via serverless into the rapidly growing field of federated learning, we developed and released FLoX: Federated Learning on funcX. This framework was built from the ground up around a serverless computing paradigm with experimentation and usability in mind. Extending the lessons learned from DELTA around self-adaptive systems, we began exploring the potential of automating tradeoffs found in FLoX and federated learning in general.

Looking ahead, we are developing FLoX into a much more robust framework to enable the use of a wide range of computing resources while abstracting away the difficulties of configuring and optimizing a federated learning experiment. Additionally, we are actively working on a re-release of DELTA with all extensions combined into one framework with updated cost and execution time predictors and complete resource provisioning ability. Finally, we are designing an integration between FLoX and DELTA that will enable serverless-based FL to automatically place each component of an FL flow and move data as necessary to best use the available resources.

To bring this idea of resource abstraction via serverless into the rapidly growing field of federated learning, we developed and released FLoX: Federated Learning on funcX. This framework was built from the ground up around a serverless computing paradigm with experimentation and usability in mind. Extending the lessons learned from DELTA around self-adaptive systems, we began exploring the potential of automating tradeoffs found in FLoX and federated learning in general.

Looking ahead, we are developing FLoX into a much more robust framework to enable the use of a wide range of computing resources while abstracting away the difficulties of configuring and optimizing a federated learning experiment. Additionally, we are actively working on a re-release of DELTA with all extensions combined into one framework with updated cost and execution time predictors and complete resource provisioning ability. Finally, we are designing an integration between FLoX and DELTA that will enable serverless-based FL to automatically place each component of an FL flow and move data as necessary to best use the available resources.

Event Type

Doctoral Showcase

Posters

TimeTuesday, 14 November 202310am - 5pm MST

LocationE Concourse

Cloud Computing

Distributed Computing

TP

XO/EX

Archive

view

Hide Details

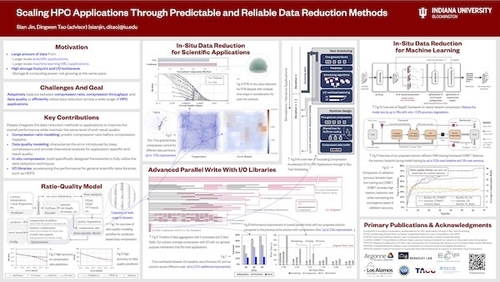

Scaling HPC Applications through Predictable and Reliable Data Reduction Methods

DescriptionFor scientists and engineers, large-scale computer systems are one of the most powerful tools to solve complex high-performance computing (HPC) and Deep Learning (DL) problems. With the ever-increasing computing power such as the new generation of exascale (one exaflop or a billion billion calculations per second) supercomputers, the gap between computing power and limited storage capacity and I/O bandwidth has become a major challenge for scientists and engineers. Large-scale scientific simulations on parallel computers can generate extremely large amounts of data that are highly compute and storage intensive. This study will introduce data reduction techniques as a promising solution to significantly reduce the data sizes while maintaining high data fidelity for post-analyses in HPC applications. This study can be categorized into mainly four scenarios: (1) A ratio-quality model that makes lossy compression predictable; (2) advanced parallel write solution with async-I/O; (3) in-situ data reduction for scientific applications; and (4) in-situ data reduction for large-scale machine learning.

Event Type

Doctoral Showcase

Posters

TimeTuesday, 14 November 202310am - 5pm MST

LocationE Concourse

Data Compression

I/O and File Systems

TP

XO/EX

Archive

view

Hide Details

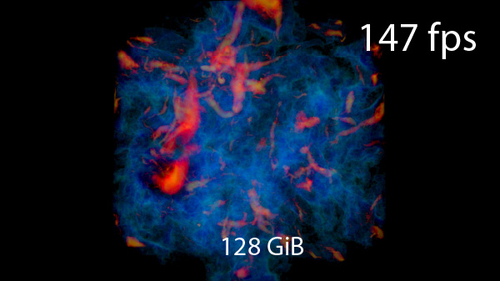

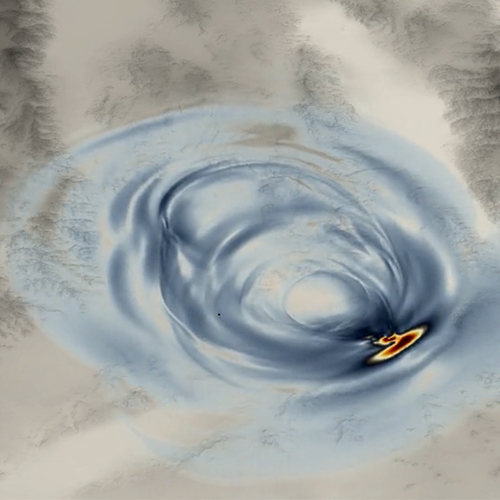

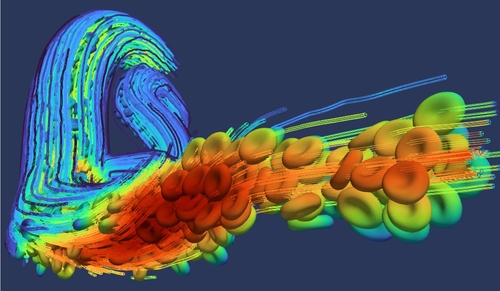

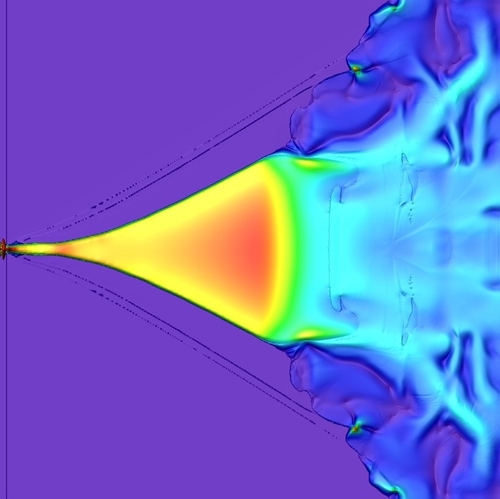

Interactive In-Situ Visualization of Large Distributed Volume Data

DescriptionLarge distributed volume data are routinely produced in numerical simulations and experiments. In-situ visualization, the visualization of simulation or experiment data as it is generated, enables simulation steering and experiment control, which helps scientists gain an intuitive understanding of the studied phenomena. Such data exploration requires interactive visualization with smooth viewpoint changes and zooming to convey depth perception and spatial understanding. As data sizes increase, this becomes increasingly challenging.

This thesis presents an end-to-end solution for interactive in-situ visualization on distributed computers based on novel extensions to the Volumetric Depth Image (VDI) representation. VDIs are view-dependent, compact representations of volume data that can be rendered faster than the original data.

We propose the first algorithm to generate VDIs on distributed 3D data, using sort-last parallel compositing to scale to large data sizes. Scalability is achieved by a novel compact in-memory representation of VDIs that exploits sparsity and optimizes performance. We also propose a low-latency architecture for sharing data and hardware resources with a running simulation. The resulting VDI is streamed for remote interactive visualization.

We provide a novel raycasting algorithm for rendering streamed VDIs, significantly outperforming existing solutions. We exploit properties of perspective projection to minimize calculations in the GPU kernel and leverage spatial smoothness in the data to minimize memory accesses.

The quality and performance of the approach are evaluated on multiple datasets, showing that the approach outperforms state-of-the-art techniques for visualizing large distributed volume data. The contributions are implemented as extensions to established open-source tools.

This thesis presents an end-to-end solution for interactive in-situ visualization on distributed computers based on novel extensions to the Volumetric Depth Image (VDI) representation. VDIs are view-dependent, compact representations of volume data that can be rendered faster than the original data.

We propose the first algorithm to generate VDIs on distributed 3D data, using sort-last parallel compositing to scale to large data sizes. Scalability is achieved by a novel compact in-memory representation of VDIs that exploits sparsity and optimizes performance. We also propose a low-latency architecture for sharing data and hardware resources with a running simulation. The resulting VDI is streamed for remote interactive visualization.

We provide a novel raycasting algorithm for rendering streamed VDIs, significantly outperforming existing solutions. We exploit properties of perspective projection to minimize calculations in the GPU kernel and leverage spatial smoothness in the data to minimize memory accesses.

The quality and performance of the approach are evaluated on multiple datasets, showing that the approach outperforms state-of-the-art techniques for visualizing large distributed volume data. The contributions are implemented as extensions to established open-source tools.

Event Type

Doctoral Showcase

Posters

TimeTuesday, 14 November 202310am - 5pm MST

LocationE Concourse

Data Analysis, Visualization, and Storage

TP

XO/EX

Archive

view

Hide Details

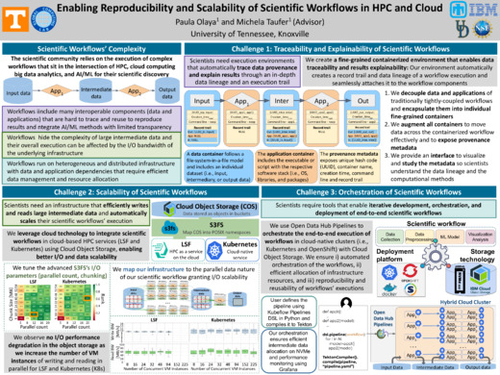

Enabling Reproducibility and Scalability of Scientific Workflows in HPC and Cloud

DescriptionScientific communities across fields like earth science, biology, and materials science increasingly run complex workflows for their scientific discovery. We work closely with these communities to leverage high-performance computing (HPC), big data analytics, and artificial intelligence/machine learning (AI/ML) to increase and accelerate their workflows’ productivity. Our work addresses the new challenges brought about by this optimization process.

We identify three main challenges in these workflows: i) they integrate AI/ML methods with limited transparency and include many interoperable components (data and applications) that are hard to trace and reuse to reproduce results; ii) they hide the complexity of large intermediate data and their overall execution can be affected by the I/O bandwidth of the underlying infrastructure; and iii) they run on heterogeneous and distributed infrastructure with data and application dependencies that require efficient data management and resource allocation.

To address these challenges, we provide solutions that leverage the convergence between high-performance and cloud computing. First, we design and develop fine-grained containerized environments that enable data traceability and results explainability by automatically annotating and seamlessly attaching provenance information. Second, since the workflows are already containerized, we integrate them in HPC and native-cloud infrastructure and tune the storage technology to enable better I/O and data scalability. Finally, we orchestrate the end-to-end execution of workflows, ensuring efficient allocation of infrastructure resources and intermediate data management, and supporting reproducibility and reusability of workflows’ executions.

We identify three main challenges in these workflows: i) they integrate AI/ML methods with limited transparency and include many interoperable components (data and applications) that are hard to trace and reuse to reproduce results; ii) they hide the complexity of large intermediate data and their overall execution can be affected by the I/O bandwidth of the underlying infrastructure; and iii) they run on heterogeneous and distributed infrastructure with data and application dependencies that require efficient data management and resource allocation.

To address these challenges, we provide solutions that leverage the convergence between high-performance and cloud computing. First, we design and develop fine-grained containerized environments that enable data traceability and results explainability by automatically annotating and seamlessly attaching provenance information. Second, since the workflows are already containerized, we integrate them in HPC and native-cloud infrastructure and tune the storage technology to enable better I/O and data scalability. Finally, we orchestrate the end-to-end execution of workflows, ensuring efficient allocation of infrastructure resources and intermediate data management, and supporting reproducibility and reusability of workflows’ executions.

Event Type

Doctoral Showcase

Posters

TimeTuesday, 14 November 202310am - 5pm MST

LocationE Concourse

Reproducibility

TP

XO/EX

Archive

view

Hide Details

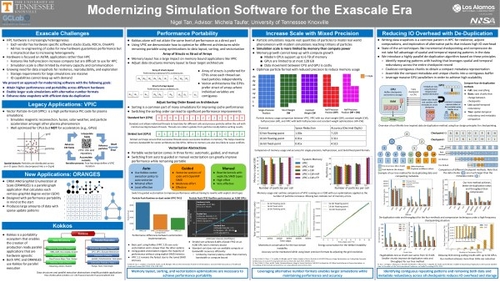

Modernizing Simulation Software for the Exascale Era

DescriptionModern HPC hardware is becoming increasingly heterogeneous and diverse in the exascale era. The diversity of hardware and software stacks adds additional development challenges to high performance simulations. One common development approach is to re-engineer the code for each new target architecture in order to maximize performance. However, this re-engineering effort is no longer practical due to increasing heterogeneous hardware. Adding support for a single family of GPUs alone poses a significant challenge. Supporting each major vendor's hardware and software stacks takes valuable developer time away from optimizing and enhancing simulation capabilities. Moving forward, the community must modernize the code development process in order to achieve the greatest scientific output.

In this work, we examine the challenges posed by emerging heterogeneous hardware. These challenges include developing performance portable code, leveraging hardware features targeting AI/ML for HPC applications, and difficulties managing limited I/O resources while checkpointing. To address these challenges we present a modernization approach for scientific software that ensures the following. Attain high performance and portability across architectures using the Kokkos portability framework in addition to optimizations to memory layout, sorting algorithms, and vectorization. Leverage alternative number formats such as half-precision and fixed-point to maximize usage of the limited memory on GPUs and enable larger simulations. Reduce IO overhead and storage requirements through the identification and elimination of spatial-temporal redundancy in application data.

In this work, we examine the challenges posed by emerging heterogeneous hardware. These challenges include developing performance portable code, leveraging hardware features targeting AI/ML for HPC applications, and difficulties managing limited I/O resources while checkpointing. To address these challenges we present a modernization approach for scientific software that ensures the following. Attain high performance and portability across architectures using the Kokkos portability framework in addition to optimizations to memory layout, sorting algorithms, and vectorization. Leverage alternative number formats such as half-precision and fixed-point to maximize usage of the limited memory on GPUs and enable larger simulations. Reduce IO overhead and storage requirements through the identification and elimination of spatial-temporal redundancy in application data.

Event Type

Doctoral Showcase

Posters

TimeTuesday, 14 November 202310am - 5pm MST

LocationE Concourse

Heterogeneous Computing

Software Engineering

TP

XO/EX

Archive

view

Hide Details

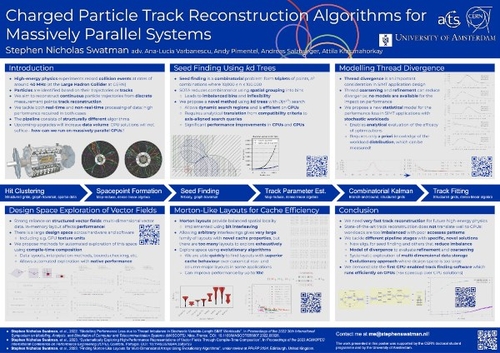

Charged Particle Track Reconstruction Algorithms for Massively Parallel Systems

DescriptionThe reconstruction of the trajectories of charged particles through detector experiments is a core computational task in the domain of high-energy physics. Upcoming upgrades to accelerators such as the Large Hadron Collider as well as to experiments like ATLAS threaten to render existing CPU-based approaches to track reconstruction insufficient, and the use of massively parallel systems - GPGPUs in particular - is an important opportunity to meet future data processing requirements. In my thesis, I investigate the feasibility of GPGPU-based track reconstruction from performance engineering perspective: I focus on structured analysis of application performance, the development of statistical and analytical models of performance, methods for mitigating the challenges of GPGPU programming, and the design and implementation of novel track reconstruction algorithms. The key contributions of my thesis include the development of novel algorithms for hit clustering, seed finding, and combinatorial Kalman filtering, key parts of the track reconstruction process. These algorithms suffer from significant load imbalance and thread divergence, and I have developed a novel statistical method for estimating the performance effects of this, as well as to guide optimization through thread refinement and coarsening. I have developed a method for the automated design space exploration of data storage methods for magnetic fields, which play a crucial role in track reconstruction. Furthermore, I have developed an evolutionary method for finding layouts for multi-dimensional arrays in hierarchical memory systems. My thesis will be concluded by a comprehensive study of the performance of track reconstruction, as guided by the aforementioned research.

Event Type

Doctoral Showcase

Posters

TimeTuesday, 14 November 202310am - 5pm MST

LocationE Concourse

Accelerators

Applications

TP

XO/EX

Archive

view

Hide Details

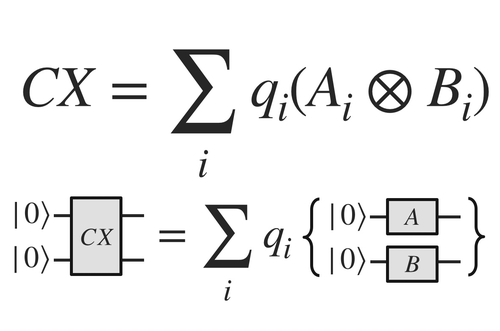

Design Automation Tools and Software for Quantum Computing

DescriptionQuantum computing promises to solve problems beyond the reach of today’s machines, but it requires efficient and reliable software tools to realize its potential. This poster gives an overview of various contributions towards design automation methods and software for quantum computing that leverage existing knowledge and expertise in classical circuit and system design. It focuses on three major tasks: simulation, compilation, and verification of quantum circuits. The proposed solutions demonstrate significant improvements in efficiency, scalability, and reliability for all tasks and constitute the backbone of the Munich Quantum Toolkit (MQT), a collection of open-source tools for quantum computing. The respective solutions advance the state of the art in quantum computing and illustrate the benefits of design automation methods for this emerging field.

Event Type

Doctoral Showcase

Posters

TimeTuesday, 14 November 202310am - 5pm MST

LocationE Concourse

Quantum Computing

TP

XO/EX

Archive

view

Hide Details

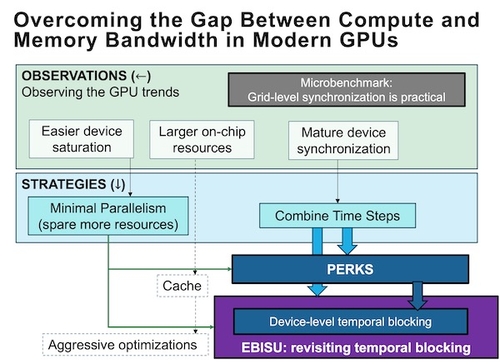

Overcoming the Gap between Compute and Memory Bandwidth in Modern GPUs

DescriptionThe imbalance between compute and memory bandwidth has been a long-standing issue. Despite efforts to address it, the gap between them is still widening. This has led to the categorization of many applications as memory-bound kernels.

This dissertation centers on memory-bound kernels, with a particular emphasis on Graphics Processing Units (GPUs), given their rising prevalence in High-Performance Computing (HPC) systems.

In this dissertation, we initially focus on the evolution trend of GPU development in the last decades. Examples include cooperative groups (i.e., device-wide barriers), asynchronous copy of shared memory (i.e., hardware prefetching), low(er) latency of operations, and larger volume of on-chip resources (register files and L1 cache).

This dissertation seeks to utilize the latest GPU features to optimize memory-bound kernels. Specifically, we propose extending the kernel's lifetime across the time steps and taking advantage of the large volume of on-chip resources (i.e., register files and scratchpad memory) in reducing or eliminating traffic to the device memory. Furthermore, we champion a minimum level of parallelism to maximize the available on-chip resources.

Based on the strategies, we propose a general execution model for running memory-bound iterative GPU kernels: PERsistent KernelS (PERKS) and a novel temporal blocking method, EBISU. Evaluations have shown outstanding performance in the latest GPU architectures compared with counterpart state-of-the-art implementations.

This dissertation centers on memory-bound kernels, with a particular emphasis on Graphics Processing Units (GPUs), given their rising prevalence in High-Performance Computing (HPC) systems.

In this dissertation, we initially focus on the evolution trend of GPU development in the last decades. Examples include cooperative groups (i.e., device-wide barriers), asynchronous copy of shared memory (i.e., hardware prefetching), low(er) latency of operations, and larger volume of on-chip resources (register files and L1 cache).

This dissertation seeks to utilize the latest GPU features to optimize memory-bound kernels. Specifically, we propose extending the kernel's lifetime across the time steps and taking advantage of the large volume of on-chip resources (i.e., register files and scratchpad memory) in reducing or eliminating traffic to the device memory. Furthermore, we champion a minimum level of parallelism to maximize the available on-chip resources.

Based on the strategies, we propose a general execution model for running memory-bound iterative GPU kernels: PERsistent KernelS (PERKS) and a novel temporal blocking method, EBISU. Evaluations have shown outstanding performance in the latest GPU architectures compared with counterpart state-of-the-art implementations.

Event Type

Doctoral Showcase

Posters

TimeTuesday, 14 November 202310am - 5pm MST

LocationE Concourse

Accelerators

TP

XO/EX

Archive

view

Hide Details

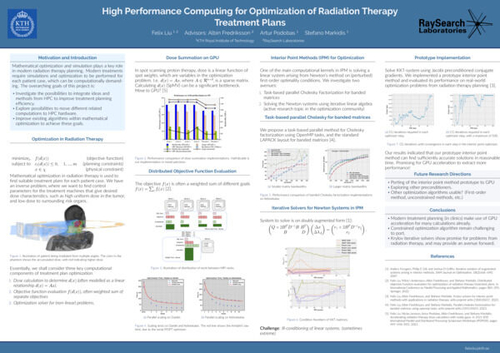

High Performance Computing for Optimization of Radiation Therapy Treatment Plans

DescriptionModern radiation therapy relies heavily on computational methods to design optimal treatment plans (control parameters for the treatment machine) for individual patients. These parameters are determined by constructing and solving a mathematical optimization problem. Ultimately, the goal is to create treatment plans for each patient such that a high dose is delivered to the tumor, while sparing surrounding healthy tissue as much as possible. Solving the optimization problem can be computationally expensive, as it requires both a method to compute the delivered dose in the patient and an algorithm to solve a (in general) constrained and nonlinear optimization problem.

The goal of this thesis project has been to investigate the use of HPC hardware and methods to accelerate the computational workflow in radiation therapy treatment planning. First, we propose two methods to bring the optimization to HPC hardware using GPU acceleration and distributed computing for dose summation and objective function calculation respectively. We show that our methods achieve competitive performance compared to state-of-the-art libraries and scale well, up to the Amdahl’s law limit.

Then, we investigate methods to accelerate interior point methods, a popular algorithm for constrained optimization. We investigate the use of iterative Krylov subspace linear solvers to solve Newton systems from interior point methods and show that we can compute solutions in reasonable time for our problems, in spite of extreme ill-conditioning. This approach presents one avenue by which constrained optimization solvers for radiation therapy could be ported to GPU accelerators.

The goal of this thesis project has been to investigate the use of HPC hardware and methods to accelerate the computational workflow in radiation therapy treatment planning. First, we propose two methods to bring the optimization to HPC hardware using GPU acceleration and distributed computing for dose summation and objective function calculation respectively. We show that our methods achieve competitive performance compared to state-of-the-art libraries and scale well, up to the Amdahl’s law limit.

Then, we investigate methods to accelerate interior point methods, a popular algorithm for constrained optimization. We investigate the use of iterative Krylov subspace linear solvers to solve Newton systems from interior point methods and show that we can compute solutions in reasonable time for our problems, in spite of extreme ill-conditioning. This approach presents one avenue by which constrained optimization solvers for radiation therapy could be ported to GPU accelerators.

Event Type

Doctoral Showcase

Posters

TimeTuesday, 14 November 202310am - 5pm MST

LocationE Concourse

Applications

TP

XO/EX

Archive

view

Tuesday, 14 November 2023 10am-5pm D Concourse

Hide Details

Conversing Faults: The 2019 Ridgecrest Earthquake

DescriptionThe 2019 Ridgecrest earthquakes occurred in a complex system of fault lines in the Mojave desert. Separated by 34 hours, the earthquakes were caused by ruptures in separate but nearby faults. In this study of the geophysical processes underlying these events the surface, known faults and the volumetric subsurface are modeled on HPC systems. Visualization techniques are used to analyze the simulation results in their three-dimensional context.

Event Type

Posters

Scientific Visualization & Data Analytics Showcase

TimeTuesday, 14 November 202310am - 5pm MST

LocationD Concourse

Data Analysis, Visualization, and Storage

HPC in Society

Modeling and Simulation

Visualization

TP

XO/EX

Archive

view

LinksVisualization

Hide Details

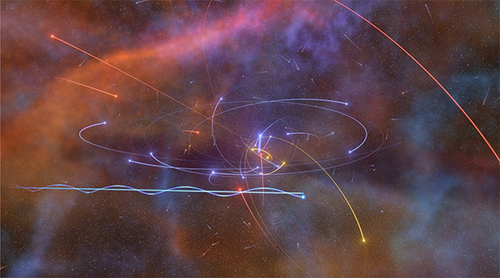

A Journey to the Center of the Milky Way: Stellar Orbits around Its Central Black Hole

DescriptionThe Advanced Visualization Lab at the NCSA created a cinematic scientific visualization showing a flight through the Milky Way galaxy, to the galactic center where stars are orbiting around a supermassive black hole. The tour summarizes results from Andrea Ghez's Galactic Center Group: their study of the motions of stars around the Milky Way's central black hole reveals a rich and surprising environment, with hot young stars (coded as purple) where few were expected to be, many orbiting in a common plane; a paucity of cooler old stars (yellow); a population of unexpected "G-object" dusty stars (red); and an eclipsing binary star (teal). The black hole itself, shrouded in mystery, is seen only as a tiny faint twinkling radio source. But the movement of these nearby stars, especially the S0-2 "hero" (pale blue ellipse), probe the black hole's gravity, exposing its massive presence.

Event Type

Posters

Scientific Visualization & Data Analytics Showcase

TimeTuesday, 14 November 202310am - 5pm MST

LocationD Concourse

Data Analysis, Visualization, and Storage

Modeling and Simulation

Visualization

TP

XO/EX

Archive

view

LinksVisualization

Hide Details

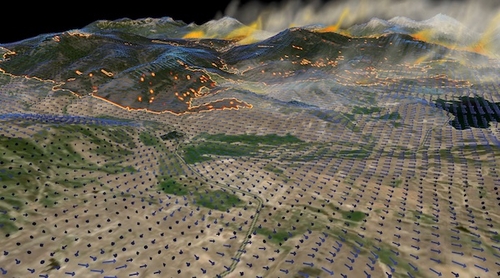

Visualizing Megafires: How AI Can Be Used to Drive Wildfire Simulations with Better Predictive Skill

DescriptionThe East Troublesome Wildfire was the fourth largest wildfire to date in Colorado history, igniting on October 14, 2020. Driven by low humidity and high winds, the wildfire spread to over 200,000 acres in nine days, with 87,000 of those acres being burnt in a single 24 hour period. Wildfire simulations and forecasts help decision-makers issue evacuation orders and inform response teams, but these simulations depend on accurate variable inputs to produce trustworthy results. These wildfire visualizations demonstrate new AI tools developed at the National Center for Atmospheric Research (NCAR), which are producing superior wildfire simulation outputs than have been available in the past.

Event Type

Posters

Scientific Visualization & Data Analytics Showcase

TimeTuesday, 14 November 202310am - 5pm MST

LocationD Concourse

Data Analysis, Visualization, and Storage

HPC in Society

Modeling and Simulation

Visualization

TP

XO/EX

Archive

view

LinksVisualization

Hide Details

ExaWind at NREL: Upping the Ante

DescriptionThe objective of the ExaWind component of the Exascale Computing Project is to deliver many-turbine blade-resolved simulations in complex terrain. These simulations bring new challenges to both compute and analysis of the resulting data. In this paper/video, we visually explore the impact of ExaWind on wind simulations through two studies of a small wind farm under two atmospheric conditions. We then turn to analysis and review tools that visualization researchers at NREL use to answer the challenges that ExaWind brings.

Event Type

Posters

Scientific Visualization & Data Analytics Showcase

TimeTuesday, 14 November 202310am - 5pm MST

LocationD Concourse

Data Analysis, Visualization, and Storage

Exascale

HPC in Society

Modeling and Simulation

Visualization

TP

XO/EX

Archive

view

LinksVisualization

Hide Details

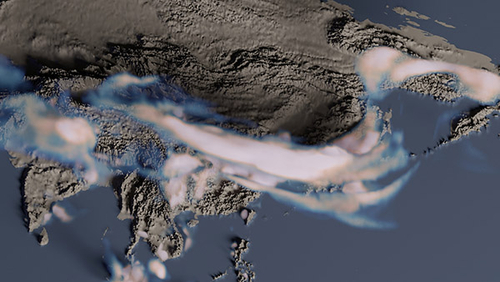

Visualizing the Impact of the Asian Summer Monsoon on the Composition of the Upper Troposphere and Lower Stratosphere

DescriptionWe present an explanatory-track visualization which utilizes multiple open-source graphics tools, including the C++ library OpenVDB and the 3D animation software Blender, to create a cinematic representation of simulation data generated in support of the Asian Summer Monsoon Chemical and Climate Impact Project (ACCLIP) campaign. After a brief summary of the project and data simulation, the process and techniques used to create the visualization are explained in detail.

Event Type

Posters

Scientific Visualization & Data Analytics Showcase

TimeTuesday, 14 November 202310am - 5pm MST

LocationD Concourse

Data Analysis, Visualization, and Storage

HPC in Society

Modeling and Simulation

Visualization

TP

XO/EX

Archive

view

LinksVisualization

Tuesday, 14 November 2023 10am-5pm DEF Concourse

Hide Details

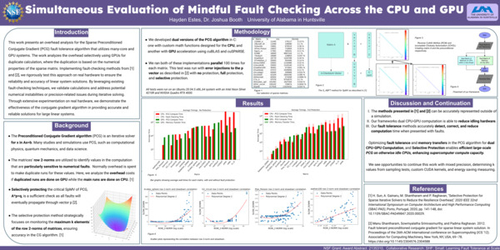

Simultaneous Evaluation of Mindful Fault Checking across the CPU and GPU

DescriptionThis work comprehensively analyzes the overhead when implementing fault-checking algorithms for sparse preconditioned conjugate gradient (PCG) solvers on many-core and GPU-accelerated systems. Our objective is to selectively utilize GPUs for duplicate calculations based on the numerical properties of the sparse matrices to enhance the reliability and performance of linear system solutions. Enabling the ability to rely on the relatively underutilized CPU for fault detection improves scientific applications' ability to efficiently manage their resources on large-scale systems. By leveraging existing fault-checking techniques, we validate calculations and address potential numerical instabilities or precision-related issues during iterative solving. Through extensive experimentation on real hardware, we demonstrate the effectiveness of the conjugate gradient algorithm in providing accurate and reliable solutions for large linear systems.

Event Type

ACM Student Research Competition: Graduate Poster

ACM Student Research Competition: Undergraduate Poster

Posters

TimeTuesday, 14 November 202310am - 5pm MST

LocationDEF Concourse

TP

XO/EX

Archive

view

Hide Details

ProxyStreams: Leveraging Lightweight Proxies for Portable Streams

DescriptionA novel streaming approach is introduced for Python, leveraging the ProxyStore system to facilitate the exchange of stream references across distributed systems. This approach utilizes generators to efficiently publish and consume messages from streams. The extensible backend connector interface of ProxyStore enables support for diverse communication mechanisms, such as transitioning from ZMQ to RDMA. Performance results highlight the capability to perform data PUT and GET operations on streams with minimal overhead and high efficiency.

Event Type

ACM Student Research Competition: Graduate Poster

ACM Student Research Competition: Undergraduate Poster

Posters

TimeTuesday, 14 November 202310am - 5pm MST

LocationDEF Concourse

TP

XO/EX

Archive

view

Hide Details

A Comparison of Deep and Shallow Residual Networks for Medical Imaging Classification

DescriptionThe complexity and parameters of mainstream large models are increasing rapidly. For example, the increasingly popular large language models (e.g., ChatGPT) have billions of parameters. While this has led to performance improvements, the performance gains for simple tasks may be unacceptable for the additional cost. We apply residual networks of three different depths and evaluate them extensively on the MedMNIST pneumonia dataset. Experimental results show that smaller models can achieve satisfactory performance at significantly lower costs than larger models.

Event Type

ACM Student Research Competition: Graduate Poster

ACM Student Research Competition: Undergraduate Poster

Posters

TimeTuesday, 14 November 202310am - 5pm MST

LocationDEF Concourse

TP

XO/EX

Archive

view

Hide Details

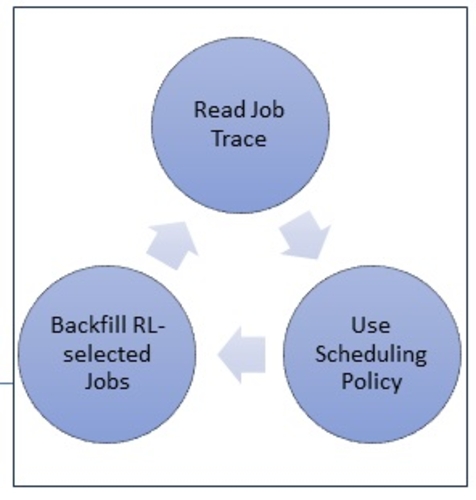

A Reinforcement Learning-Based Backfilling Strategy for HPC Batch Jobs

DescriptionHigh Performance Computing (HPC) systems are essential for various scientific fields, and effective job scheduling is crucial for their performance. Traditional backfilling techniques, such as EASY-backfilling, rely on user-submitted runtime estimates, which can be inaccurate and lead to suboptimal scheduling. This poster presents RL-Backfiller, a novel reinforcement learning (RL) based approach to improve HPC job scheduling. Our method incorporates RL to make better backfilling decisions, independent of user-submitted runtime estimates. We trained RL-Backfiller on the synthetic Lublin-256 workload and tested it on the real SDSC-SP2 1998 workload. We show how RLBackfilling can learn effective backfilling strategies and outperform traditional EASY-backfilling and other heuristic combinations via trial-and-error on existing job traces. Our evaluation results show up to 17x better scheduling performance (based on average bounded job slowdown) compared to EASY-backfilling

Event Type

ACM Student Research Competition: Graduate Poster

ACM Student Research Competition: Undergraduate Poster

Posters

TimeTuesday, 14 November 202310am - 5pm MST

LocationDEF Concourse

TP

XO/EX

Archive

view

Hide Details

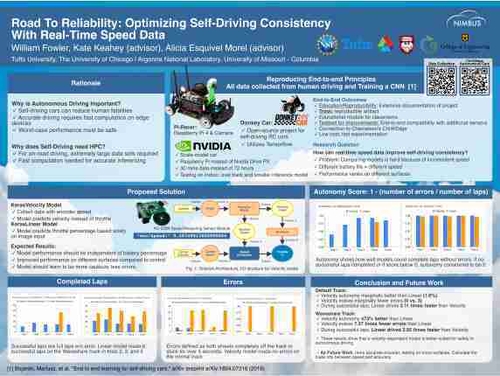

Road To Reliability: Optimizing Self-Driving Consistency With Real-Time Speed Data

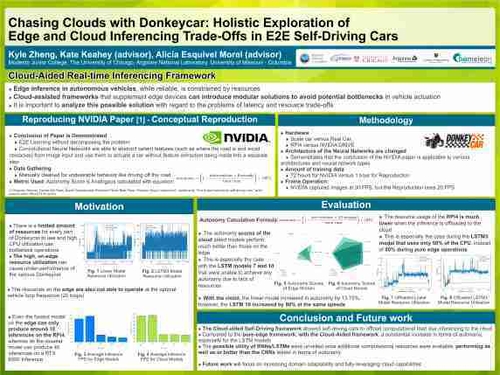

DescriptionSelf-driving cars can potentially improve transportation efficiency and reduce human fatalities – provided they have access to significant processing power and large amounts of data. One popular approach for actualizing autonomous vehicles is using end-to-end learning, in which a machine learning model is trained on a large data set of real human driving. This poster shows how self-driving consistency can be improved using a Convolutional Neural Network (CNN) to predict current velocity. Our approach first reproduces an end-to-end learning result and then extends it with real-time speed data as additional model input.

Event Type

ACM Student Research Competition: Graduate Poster

ACM Student Research Competition: Undergraduate Poster

Posters

TimeTuesday, 14 November 202310am - 5pm MST

LocationDEF Concourse

TP

XO/EX

Archive

view

Hide Details

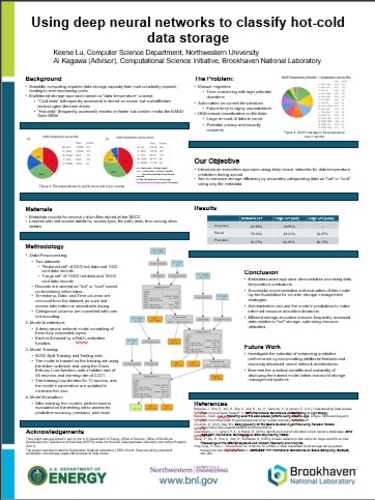

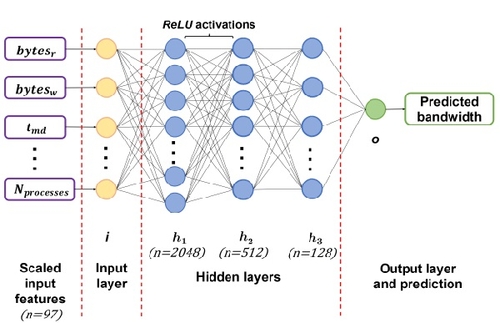

Using Deep Neural Networks to Classify Hot-Cold Data Storage

DescriptionThe Scientific Data and Computing Center (SDCC) at Brookhaven National Laboratory manages a data storage system with millions of files totaling petabytes of data. To optimize costs, they use a multi-tiered storage approach based on data temperature, storing infrequently accessed ("cold") data on cheaper technologies like Blu-ray disks or tape drives, and frequently accessed ("hot") data on faster but costlier mediums like Hard Disk Drives or Solid State Drives. Current data migration decisions rely on manual human judgment supported by simple algorithms not suitable for long-term predictions. To address this, our project aims to automate the process by training a deep neural network (DNN) on file metadata to predict data temperature upon upload. The model achieved promising initial results, with a 90.53% general accuracy in predicting data temperature. This automation could significantly improve the management and distribution of the vast research data generated at BNL.

Event Type

ACM Student Research Competition: Graduate Poster

ACM Student Research Competition: Undergraduate Poster

Posters

TimeTuesday, 14 November 202310am - 5pm MST

LocationDEF Concourse

TP

XO/EX

Archive

view

Hide Details

Scaling Studies for Efficient Parameter Search and Parallelism for Large Language Model Pretraining

DescriptionAI accelerator processing and memory constraints largely dictate the scale in which machine learning workloads (training and inference) can be executed within a desirable time frame. Training a transformer-based model requires the utilization of HPC harnessed through inherent parallelism embedded in processor design, to deliberate modification of neural networks to increase concurrency during training and inference. Our model is the culmination of different performance tests seeking the ideal combination of frameworks and configurations for training a 13-billion-parameter translation model for foreign languages. We performed ETL over the corpus, which involved training a balanced interleaved dataset. We investigated the impact of batch size, learning rate, and different forms of precision on model training time, accuracy, and memory consumption. We use DeepSpeed stage 3 and Huggingface accelerate to parallelize our model. Our model, based on the mT5 architecture, is trained on the mC4 and language-specific datasets, enabling question-answering in the fine-tuning process.

Event Type

ACM Student Research Competition: Graduate Poster

ACM Student Research Competition: Undergraduate Poster

Posters

TimeTuesday, 14 November 202310am - 5pm MST

LocationDEF Concourse

TP

XO/EX

Archive

view

Hide Details

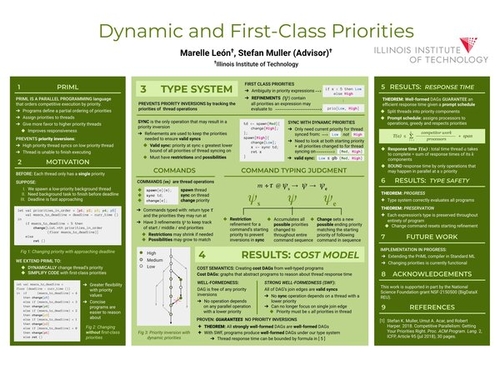

Dynamic and First-Class Priorities

DescriptionInteractive parallel programs have varying responsiveness requirements for tasks of differing urgency, which has been met with the solution of thread priorities to determine the tasks' allocation of processor time. Previous priority-based language models limit the span of entire threads to a single priority. Given an approaching real-time deadline, tasks are unable to shift to a higher priority in order to match the changing requirements. We design a type system that enforces thread priorities and allows dynamic prioritization, treating priorities as first-class to reduce code complexity. We create a dependency graph-based cost model for our system and define strong well-formedness to exclude unwanted priority inversions. We then prove that programs under our type system produce strongly well-formed graphs.

Event Type

ACM Student Research Competition: Graduate Poster

ACM Student Research Competition: Undergraduate Poster

Posters

TimeTuesday, 14 November 202310am - 5pm MST

LocationDEF Concourse

TP

XO/EX

Archive

view

Hide Details

How Much Noise Is Enough: On Privacy, Security, and Accuracy Trade-Offs in Differentially Private Federated Learning

DescriptionCentralized machine learning techniques have caused privacy concerns for users. Federated Learning~(FL) mitigates this as a decentralized training system where no raw data are communicated across the network to a centralized server. Instead, the machine learning model is trained locally on each device and they send the locally-trained model weights to a central server to aggregate. However, there are critical challenges with FL. Security issues plague FL, such as model poisoning via label flipping. Additionally, there even exist privacy concerns via data leakage by reconstruction of weights. In this work, we apply differential privacy (which adds noise to the model weights before sending across the network) as an added privacy measure to protect sensitive data from being reconstructed. Through this research, we study the effects of differential privacy on FL with respect to security and privacy trade-offs.

Event Type

ACM Student Research Competition: Graduate Poster

ACM Student Research Competition: Undergraduate Poster

Posters

TimeTuesday, 14 November 202310am - 5pm MST

LocationDEF Concourse

TP

XO/EX

Archive

view

Hide Details

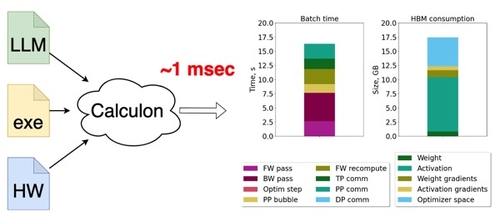

Scaling Infrastructure to Support Multi-Trillion Parameter LLM Training

DescriptionThis poster discusses efficient system designs for Large Language Model (LLM) scaling to up to 128 trillion parameters. We use a comprehensive analytical performance model to analyze how such models could be trained on current systems while maintaining 75% Model FLOPS Utilization (MFU). We first show how tensor offloading alone can be used to dramatically increase the size of trainable LLMs. We analyze performance bottlenecks when scaling on systems up to 16,384 GPUs and with models up to 128T parameters. Our findings suggest that current H100 GPUs with 80 GiB of HBM enabled with 512 GiB of tensor offloading capacity allows scaling to 11T-parameter LLMs; and getting to 128T parameters requires 120 GiB of HBM and 2 TiB of offloading memory, yielding 75%+ MFU, which is uncommon even when training much smaller LLMs today.

Event Type

ACM Student Research Competition: Graduate Poster

ACM Student Research Competition: Undergraduate Poster

Posters

TimeTuesday, 14 November 202310am - 5pm MST

LocationDEF Concourse

TP

XO/EX

Archive

view

Hide Details

Lossy and Lossless Compression for BioFilm Optical Coherence Tomography (OCT)

DescriptionOptical Coherence Tomography (OCT) can be used as a fast and non-destructive technology for bacterial biofilm imaging. However, OCT generates approximately 100 GB per flow cell, which complicates storage and data sharing. Data reduction can reduce data complications by reducing the overhead and the amount of data transferred. This work leverages the similarities between layers of OCT images to minimize the data in order to improve compression. This paper evaluates the 5 lossless and 2 lossy state-of-the-art compressors to reduce the OCT data. The reduction techniques are evaluated to determine which compressor has the most significant compression ratio while maintaining a strong bandwidth and minimal image distortion. Results show that SZ with frame before pre-processing is able to achieve the highest CR of 204.6x on its higher error bounds. The maximum compression bandwidth SZ on higher error bounds is ~41MB/s, for decompression bandwidth, it is able to outperform ZFP achieving.

Event Type

ACM Student Research Competition: Graduate Poster

ACM Student Research Competition: Undergraduate Poster

Posters

TimeTuesday, 14 November 202310am - 5pm MST

LocationDEF Concourse

TP

XO/EX

Archive

view

Hide Details

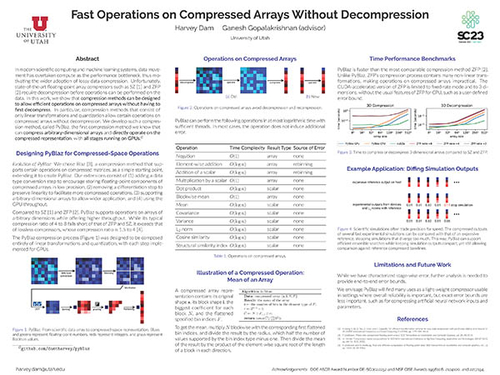

Fast Operations on Compressed Arrays without Decompression

DescriptionIn modern scientific computing and machine learning systems, data movement has overtaken compute as the performance bottleneck, thus motivating the wider adoption of lossy data compression. Unfortunately, state-of-the-art floating-point array compressors such as SZ and ZFP require decompression before operations can be performed on the data. In this work, our contribution is to show that compression methods can be designed to allow efficient operations on compressed arrays without having to first decompress. In particular, compression methods that consist of only linear transformations and quantization allow certain operations on compressed arrays without decompression. We develop such a compression method, called PyBlaz, the first compression method we know that can compress arbitrary-dimensional arrays and directly operate on the compressed representation, with all stages running on GPUs.

In the poster session, I will provide details about each compression step, several compressed-spaced operations, and our ongoing performance and application experiments.

In the poster session, I will provide details about each compression step, several compressed-spaced operations, and our ongoing performance and application experiments.

Event Type

ACM Student Research Competition: Graduate Poster

ACM Student Research Competition: Undergraduate Poster

Posters

TimeTuesday, 14 November 202310am - 5pm MST

LocationDEF Concourse

TP

XO/EX

Archive

view

Hide Details

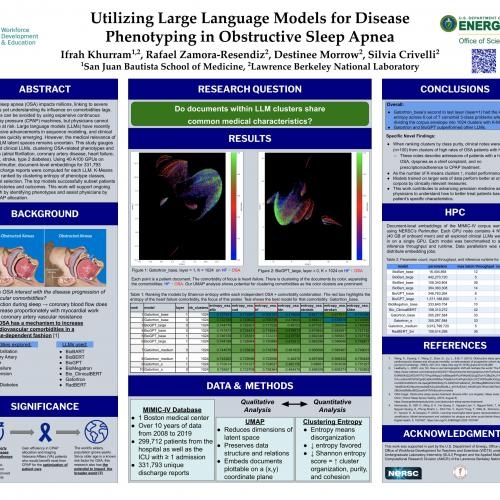

Utilizing Large Language Models for Disease Phenotyping in Obstructive Sleep Apnea

DescriptionObstructive sleep apnea (OSA) impacts millions, linking to severe complications yet understanding its influence on comorbidities lags. Complications can be avoided by using expensive continuous positive airway pressure (CPAP) machines, but physicians cannot identify those at risk. Large language models (LLMs) have recently made impressive advancements in sequence modeling, and clinical applications are quickly emerging. However, the medical relevance of pre-trained LLM latent spaces remains uncertain.

This study gauges 12 pre-trained clinical LLMs, clustering OSA-related phenotypes and comorbidities (atrial fibrillation, coronary artery disease, heart failure, hypertension, stroke, type 2 diabetes). Using 40 A100 GPUs on NERSC’s Perlmutter, document-level embeddings for 331,793 MIMIC-IV discharge reports were computed for each LLM. K-Means models were ranked by clustering entropy of phenotype classes, guiding model selection. The top models successfully subset patients with similar histories and outcomes. This work will support ongoing OSA research by identifying phenotypes and assist physicians by informing CPAP allocation.

This study gauges 12 pre-trained clinical LLMs, clustering OSA-related phenotypes and comorbidities (atrial fibrillation, coronary artery disease, heart failure, hypertension, stroke, type 2 diabetes). Using 40 A100 GPUs on NERSC’s Perlmutter, document-level embeddings for 331,793 MIMIC-IV discharge reports were computed for each LLM. K-Means models were ranked by clustering entropy of phenotype classes, guiding model selection. The top models successfully subset patients with similar histories and outcomes. This work will support ongoing OSA research by identifying phenotypes and assist physicians by informing CPAP allocation.

Event Type

ACM Student Research Competition: Graduate Poster

ACM Student Research Competition: Undergraduate Poster

Posters

TimeTuesday, 14 November 202310am - 5pm MST

LocationDEF Concourse

TP

XO/EX

Archive

view

Hide Details

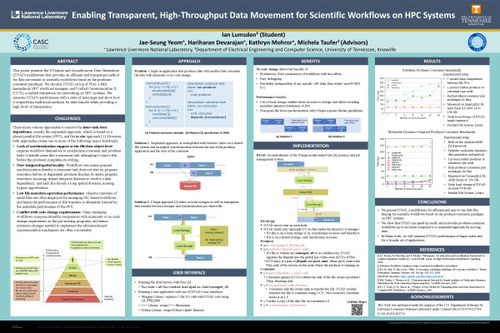

Enabling Transparent, High-Throughput Data Movement for Scientific Workflows on HPC Systems

DescriptionThis poster presents the DYnamic and Asynchronous Data Streamliner (DYAD) middleware that provides an efficient and transparent method for data movement in scientific workflows based on the producer-consumer paradigm. We develop DYAD on top of Flux, a fully hierarchical HPC workload manager, and Unified Communication X (UCX), a unified framework for networking on HPC systems. We measure DYAD's performance with a suite of mini-apps and show how it outperforms traditional methods for data transfer while providing a higher level of transparency.

Event Type

ACM Student Research Competition: Graduate Poster

ACM Student Research Competition: Undergraduate Poster

Posters

TimeTuesday, 14 November 202310am - 5pm MST

LocationDEF Concourse

TP

XO/EX

Archive

view

Hide Details

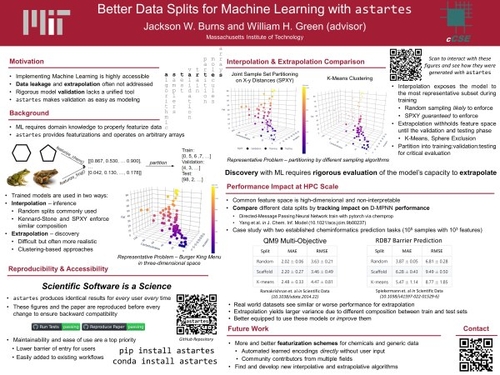

Better Data Splits for Machine Learning with Astartes

DescriptionMachine Learning (ML) has become an increasingly popular tool to accelerate traditional workflows. Critical to the use of ML is the process of splitting datasets into training, validation, and testing subsets to develop and evaluate models. Common practice is to assign these subsets randomly. Although this approach is fast, it only measures a model's capacity to interpolate. These testing errors may be overly optimistic on out-of-scope data; thus, there is a growing need to easily measure performance for extrapolation tasks. To address this issue, we report astartes, an open-source Python package that implements many similarity- and distance-based algorithms to partition data into more challenging splits. This poster focuses on use-cases within cheminformatics. However, astartes operates on arbitrary vectors, so its principals and workflow are generalizable to other ML domains as well. astartes is available via the Python package managers pip and conda and is publicly hosted on GitHub (github.com/JacksonBurns/astartes).

Event Type

ACM Student Research Competition: Graduate Poster

ACM Student Research Competition: Undergraduate Poster

Posters

TimeTuesday, 14 November 202310am - 5pm MST

LocationDEF Concourse

TP

XO/EX

Archive

view

Hide Details

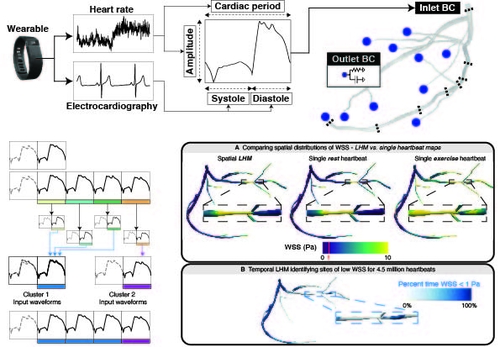

Cloud Computing at Scale: Tracking 4.5 Million Heartbeats of 3D Coronary Flow via the Longitudinal Hemodynamic Mapping Framework

DescriptionTracking hemodynamic responses to treatment and stimuli for long periods is a grand challenge. Moving from established single-heartbeat technology to longitudinal profiles would require continuous data reflecting a patient's evolving state, methods to extend the temporal domain that could be feasibly computed, and high-throughput resources. Although personalized models can accurately measure 3D hemodynamics over single heartbeats, state-of-the-art methods would require centuries of runtime on leadership-class systems to simulate one day of activity. We are establishing the Longitudinal Hemodynamic Mapping Framework (LHMF), which combines patient-specific models, wearables, and cloud computing to enable the first digital twins that capture longitudinal hemodynamic maps (LHMs). We demonstrate validity through comparison with ground truth data for 750 beats. We applied LHMF to generate the first LHM of coronary arteries spanning 4.5 million heartbeats. LHMF relies on an initial fixed set of representative simulations to enable the computationally tractable creation of LHM over heterogeneous systems.

Event Type

ACM Student Research Competition: Graduate Poster

ACM Student Research Competition: Undergraduate Poster

Posters

TimeTuesday, 14 November 202310am - 5pm MST

LocationDEF Concourse

TP

XO/EX

Archive

view

Hide Details

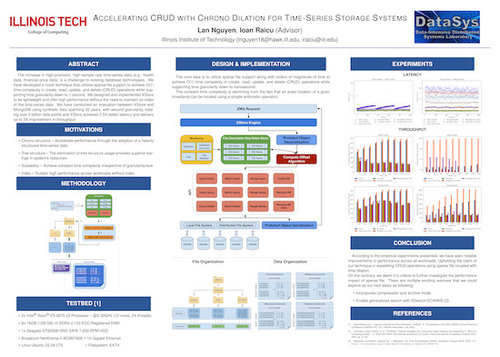

Accelerating CRUD with Chrono Dilation for Time-Series Storage Systems

DescriptionIn recent years, we have seen an un-precedented growth of data in our daily lives ranging from health data from an Apple Watch, financial stock price data, volatile crypto-currency data, to diagnostic data of nuclear/rocket simulations. The increase in high-precision, high-sample-rate time-series data is a challenge to existing database technologies. We have developed a novel technique that utilizes sparse-file support to achieve O(1) time complexity in create, read, update, and delete (CRUD) operations while supporting time granularity down to 1-second. We designed and implemented XStore to be lightweight and offer high performance without the need to maintain an index of the time-series data. We have conducted a detailed evaluation between XStore and existing best-of-breed systems such as MongoDB using synthetic data spanning 20 years, with second granularity, totaling over 5 billion datapoints. Through empirical experiments against MongoDB, XStore achieves 2.5X better latency and delivers up to 3X improvement in throughput.

Event Type

ACM Student Research Competition: Graduate Poster

ACM Student Research Competition: Undergraduate Poster

Posters

TimeTuesday, 14 November 202310am - 5pm MST

LocationDEF Concourse

TP

XO/EX

Archive

view

Hide Details

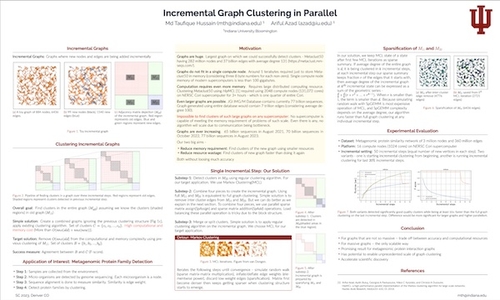

Incremental Graph Clustering in Parallel

DescriptionWe develop a distributed memory graph clustering algorithm to find clusters in a graph where new nodes and edges are being added incrementally. At each stage of the algorithm, we maintain a summary of the clustered graph computed from all incremental batches received thus far. As we receive a new batch of nodes and edges, we cluster the new graph and merge new clusters with the previous summary clusters. We use sparse linear algebra to perform these operations. Our algorithm would make it possible to find clusters in very large graphs for which regular graph clustering algorithms could not run due to computation/communication bottlenecks.

Event Type

ACM Student Research Competition: Graduate Poster

ACM Student Research Competition: Undergraduate Poster

Posters

TimeTuesday, 14 November 202310am - 5pm MST

LocationDEF Concourse

TP

XO/EX

Archive

view

Hide Details

NetCDFaster: A Geospatial Cyberinfrastructure for Multi-Dimensional Scientific Datasets Full-Stack I/O and Visualization

DescriptionNetCDF's original design included a portable file format and an intuitive application programming interface (API). However, the current NetCDF framework and its derived libraries lack efficient support for querying and visualizing data subsets with low memory use and time cost. Therefore, a full-stack solution to handle and display multidimensional data frames in NetCDF must be developed to meet the research needs. In this project, a next-generation full-stack tool, “NetCDFaster,” was developed to accelerate the reading and viewing of NetCDF data. This tool was derived from serial and parallel interfaces built on MPI-IO. The test results showed that processing time and memory usage were significantly improved than conventional methods.

Event Type

ACM Student Research Competition: Graduate Poster

ACM Student Research Competition: Undergraduate Poster

Posters

TimeTuesday, 14 November 202310am - 5pm MST

LocationDEF Concourse

TP

XO/EX

Archive

view

Hide Details

A Heterogeneous, In Transit Approach for Large Scale Cellular Modeling

DescriptionThe field of in silico cellular modeling has made notable strides in number of cells that can be simultaneously modeled. While computational capabilities have grown exponentially, I/O performance has lagged behind. To address this issue, we present an in-transit approach to enable in situ visualization and analysis of large-scale fluid-structure-interaction models on leadership-class systems. We delineate the proposed framework and demonstrate the feasibility of this approach by measuring overhead introduced. The proposed framework provides a valuable tool for both at-scale debugging and enabling scientific discovery, which would be difficult to achieve otherwise.

Event Type

ACM Student Research Competition: Graduate Poster

ACM Student Research Competition: Undergraduate Poster

Posters

TimeTuesday, 14 November 202310am - 5pm MST

LocationDEF Concourse

TP

XO/EX

Archive

view

Hide Details

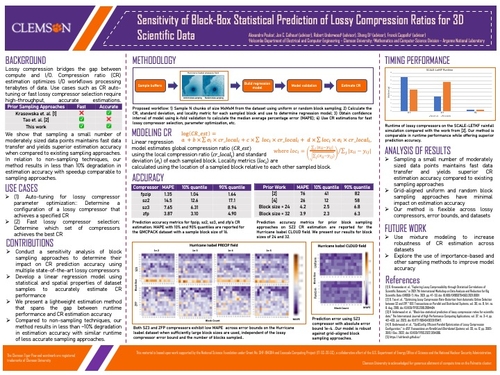

Sensitivity of Black-Box Statistical Prediction of Lossy Compression Ratios for 3D Scientific Data

DescriptionCompression ratio estimation is an important optimization of I/O workflows processing terabytes of data. Applications such as compression auto-tuning or lossy compressor selection require a high-throughput, accurate estimation. Prior works that utilize sampling are fast but inaccurate, while approaches which do not use sampling are highly accurate but slow. Through sensitivity analysis we show that sampling a small number of moderately sized data blocks maintains fast data transfer and yields superior estimation accuracy compared to existing sampling approaches, and we use this to construct a new fast and accurate sampling method. In relation to non-sampling techniques, our method results in less than 10% degradation in estimation accuracy, while still maintaining the high throughput of the less accurate sampling methods.

Event Type

ACM Student Research Competition: Graduate Poster

ACM Student Research Competition: Undergraduate Poster

Posters

TimeTuesday, 14 November 202310am - 5pm MST

LocationDEF Concourse

TP

XO/EX

Archive

view

Hide Details

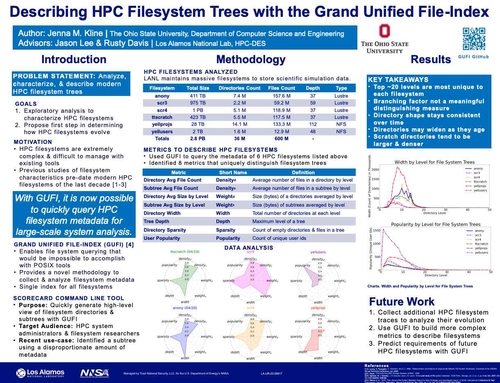

Seeing the Trees for the Forest: Describing HPC Filesystem Trees with the Grand Unified File-Index (GUFI)

DescriptionHigh performance computing (HPC) filesystems are extremely large, complex, and difficult to manage with existing tools. It is challenging for HPC administrators to describe the current structure of their filesystems, predict how they will change over time, and the requirements for future filesystems as they continue to evolve. Previous studies of filesystem characteristics largely predate the modern HPC filesystems of the last decade. The Grand Unified File Index (GUFI) was used to collect the data used to compute the characteristics of six HPC filesystems indexes from Los Alamos National Laboratory (LANL) representing 2.8 PB of data, containing 36 million directories and 600 million files. We present a methodology using GUFI to characterize the shape of HPC filesystems to help system administrators to understand their key characteristics.

This document has been approved for public release under LA-UR-23-28958.

This document has been approved for public release under LA-UR-23-28958.

Event Type

ACM Student Research Competition: Graduate Poster

ACM Student Research Competition: Undergraduate Poster

Posters

TimeTuesday, 14 November 202310am - 5pm MST

LocationDEF Concourse

TP

XO/EX

Archive

view

Hide Details

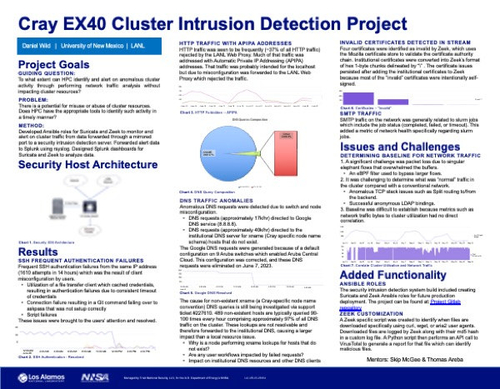

Cray EX40 Cluster Intrusion Detection System

DescriptionAnalysis of a High-Performance Computing cluster’s external network traffic provides the opportunity to identify security issues, cluster misuse, or configuration problems without reducing performance. This project captured the external network traffic to and from a Cray EX40 cluster over three months and analyzed it utilizing two open-source intrusion detection tools, Suricata and Zeek. The tool alerts were sent to Splunk via rsyslog for parsing and analysis. Several security concerns were identified, including excessive failed authentication attempts and the use of four invalid certificates. Multiple cluster configuration issues were also identified, including recurrent anomalous Domain Name Service (DNS) queries which comprised 97% of all DNS traffic and incorrectly routed outbound Hypertext Transfer Protocol traffic. The port mirror architecture combined with network intrusion detection tools offered valuable insight into security concerns and several configuration issues. Excessive failed authentication attempts and a switch DNS configuration issue were both resolved by this project.

Event Type

ACM Student Research Competition: Graduate Poster

ACM Student Research Competition: Undergraduate Poster

Posters

TimeTuesday, 14 November 202310am - 5pm MST

LocationDEF Concourse

TP

XO/EX

Archive

view

Hide Details

Job Level Communication-Avoiding Detection and Correction of Silent Data Corruption in HPC Applications

DescriptionDetecting and correcting Silent Data Corruption (SDC) is of high interest for many HPC applications due to the dramatic consequences such undetected computation errors can have. Additionally, going into the exascale era of computing, SDC error rates are only increasing with growing system sizes. State of the art methods based on instruction duplication suffer from only partial error coverage, significant synchronization overhead and strong coupling of computation and validation.

This work proposes a novel communication-avoiding approach of detecting and mitigating SDCs at the job level within the workload manager, assuming a directed acyclic graph (DAG) job model. Each job only communicates a locally generated output data hash. Computation and validation are decoupled as separately schedulable jobs and dependency stalling is avoided with a special error recovery method. The implementation of this project within the SLURM workload manager is in progress and key design aspects are outlined.

This work proposes a novel communication-avoiding approach of detecting and mitigating SDCs at the job level within the workload manager, assuming a directed acyclic graph (DAG) job model. Each job only communicates a locally generated output data hash. Computation and validation are decoupled as separately schedulable jobs and dependency stalling is avoided with a special error recovery method. The implementation of this project within the SLURM workload manager is in progress and key design aspects are outlined.

Event Type

ACM Student Research Competition: Graduate Poster

ACM Student Research Competition: Undergraduate Poster

Posters

TimeTuesday, 14 November 202310am - 5pm MST

LocationDEF Concourse

TP

XO/EX

Archive

view

Hide Details

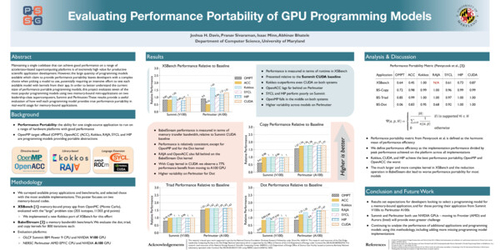

Case Study for Performance Portability of GPU Programming Frameworks for Hemodynamic Simulations

DescriptionPreparing for the deployment of large scientific and engineering codes on GPU-dense exascale systems is made challenging by the unprecedented diversity of vendor hardware and programming model alternatives for offload acceleration. To leverage the exaflops of GPUs from Frontier (AMD) and Aurora (Intel), users of high performance computing (HPC) legacy codes originally written to target NVIDIA GPUs will have to make decisions with implications regarding porting effort, performance, and code maintainability. To facilitate HPC users navigating this space, we have established a pipeline that combines generalized GPU performance models with proxy applications to evaluate the performance portability of a massively parallel computational fluid dynamics (CFD) code in CUDA, SYCL, HIP, and Kokkos with backends on current NVIDIA-based machines as well as testbeds for Aurora (Intel) and Frontier (AMD). We demonstrate the utility of predictive models and proxy applications in gauging performance bounds and guiding hand-tuning efforts.

Event Type

ACM Student Research Competition: Graduate Poster

ACM Student Research Competition: Undergraduate Poster

Posters

TimeTuesday, 14 November 202310am - 5pm MST

LocationDEF Concourse

TP

XO/EX

Archive

view

Hide Details

Fast Checkpointing of Large Language Models with TensorStore CHFS

DescriptionThe frequency of checkpoint creation in large language models is limited by the write bandwidth to a parallel file system. In this study, we aim to reduce the checkpoint creation time by writing to the Intel Optane Persistent Memory installed on the compute nodes.

We propose TensorStore CHFS, a storage driver that adds an ad hoc parallel file system CHFS to the TensorStore. The proposed method succeeded in increasing the checkpoint creation bandwidth of the T5 1.1 model by 4.5 times on 32 nodes.

We propose TensorStore CHFS, a storage driver that adds an ad hoc parallel file system CHFS to the TensorStore. The proposed method succeeded in increasing the checkpoint creation bandwidth of the T5 1.1 model by 4.5 times on 32 nodes.

Event Type

ACM Student Research Competition: Graduate Poster

ACM Student Research Competition: Undergraduate Poster

Posters

TimeTuesday, 14 November 202310am - 5pm MST

LocationDEF Concourse

TP

XO/EX

Archive

view

Hide Details

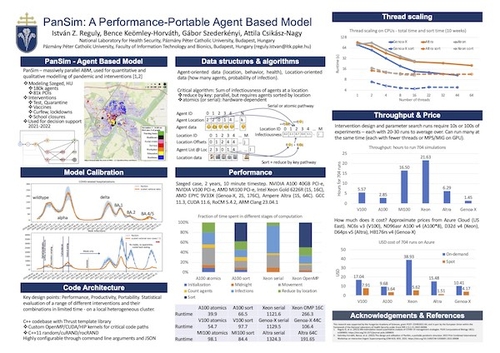

PanSim: A Performance-Portable Agent Based Model

SessionResearch Posters Display

DescriptionPanSim, a specialized agent-based model, was developed to analyze interventions against COVID-19. Implemented in C++ and Thrust, it is a highly performant and portable code. Here we focus on different algorithmic formulations for calculating cumulative values like infectiousness at different locations. A detailed comparison of time and efficiency on different CPUs and GPUs was conducted, revealing suboptimal parallel efficiency. The time to execute 704 simulations on each platform was evaluated, emphasizing overall throughput instead of latency for more taxing workloads. We benchmarked modern CPU and GPU architectures, revealing the superior performance of NVIDIA A100 and AMD Genoa-X platforms. Additionally, the monetary cost associated with executing the simulations was analyzed, presenting a contrasting landscape in on-demand and spot pricing. Ampere Altra platform emerged as the most cost-effective. The findings contribute to understanding the efficiency, time, and cost dynamics in modeling and provide insights for the practice of pandemic response planning.

Event Type

Posters

Research Posters

TimeTuesday, 14 November 202310am - 5pm MST

LocationDEF Concourse

TP

XO/EX

Archive

view

Hide Details

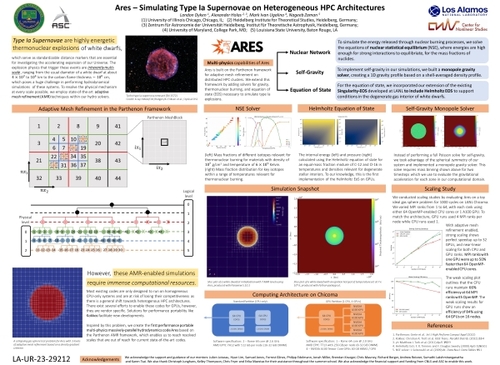

Ares – Simulating Type Ia Supernovae on Heterogeneous HPC Architectures

SessionResearch Posters Display

DescriptionType Ia Supernovae are highly luminous thermonuclear explosions of white dwarfs which serve as standardizable distance markers for investigating the accelerating expansion of our Universe. Most existing supernovae simulation codes are only designed to run on homogeneous CPU-only systems and do not take advantage of the increasing shift towards heterogeneous architectures in HPC. To address this, we present Ares, the first performance portable massively-parallel code for simulating thermonuclear burn fronts. By creating multi-physics modules using the Kokkos and Parthenon frameworks, we are able to scale supernovae simulations to distributed HPC clusters operating on any of CUDA, HIP, SYCL, HPX, OpenMP and serial backends. We evaluate our application by conducting weak and strong scaling studies on both CPU and GPU clusters, showing the efficiency of our method for a diverse set of targets.

Event Type

Posters

Research Posters

TimeTuesday, 14 November 202310am - 5pm MST

LocationDEF Concourse

TP

XO/EX

Archive

view

Hide Details

Balancing Latency and Throughput of Distributed Inference by Interleaved Parallelism

SessionResearch Posters Display

DescriptionDistributed large model inference is still in a dilemma where balancing latency and throughput, or rather cost and effect. Tensor parallelism, while capable of optimizing latency, entails a substantial expenditure. Conversely, pipeline parallelism excels in throughput but falls short in minimizing execution time.

To address this challenge, we introduce a novel solution - interleaved parallelism. This approach interleaves computation and communication across requests. Our proposed runtime system harnesses GPU scheduling techniques to facilitate the overlapping of communication and computation kernels, thereby enabling this pioneering parallelism for distributed large model inference. Extensive evaluations show that our proposal outperforms existing parallelism approaches across models and devices, presenting the best latency and throughput in most cases.

To address this challenge, we introduce a novel solution - interleaved parallelism. This approach interleaves computation and communication across requests. Our proposed runtime system harnesses GPU scheduling techniques to facilitate the overlapping of communication and computation kernels, thereby enabling this pioneering parallelism for distributed large model inference. Extensive evaluations show that our proposal outperforms existing parallelism approaches across models and devices, presenting the best latency and throughput in most cases.

Event Type

Posters

Research Posters

TimeTuesday, 14 November 202310am - 5pm MST

LocationDEF Concourse

TP

XO/EX

Archive

view

Hide Details

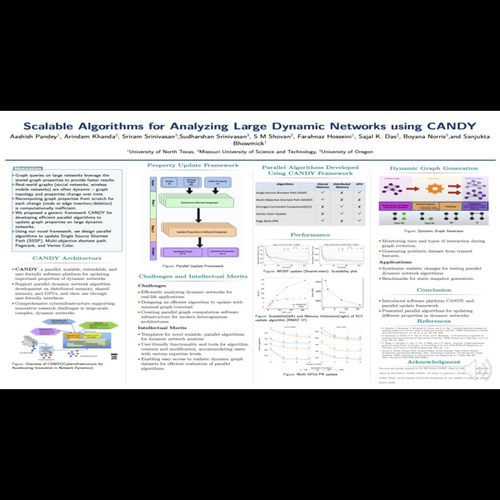

Scalable Algorithms for Analyzing Large Dynamic Networks Using CANDY

SessionResearch Posters Display

DescriptionAs the dynamic network’s topology undergoes temporal alterations, associated graph properties must be updated to ensure their ac- curacy. Addressing this requirement efficiently in large dynamic networks led to the proposal of a generic framework, CANDY (Cyberinfrastructure for Accelerating Innovation in Network Dynamics). This paper expounds on the development of algorithms and subsequent performance improvements facilitated by CANDY.

Event Type

Posters

Research Posters

TimeTuesday, 14 November 202310am - 5pm MST

LocationDEF Concourse

TP

XO/EX

Archive

view

Hide Details

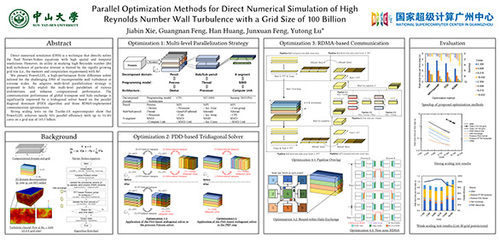

Parallel Optimization Methods for Direct Numerical Simulation of High Reynolds Number Wall Turbulence with a Grid Size of 100 Billion

SessionResearch Posters Display

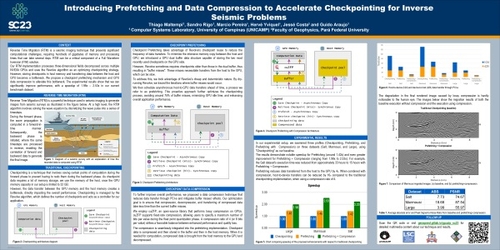

DescriptionDirect numerical simulation (DNS) is a technique that directly solves the fluid Navier-Stokes equations with high spatial and temporal resolutions. However, its utility in studying high Reynolds number (Re) wall turbulence of particular interest is limited by the rapidly growing grid size (i.e., the memory and computation requirement) with Re^3.

We present PowerLLEL, a high-performance finite difference solver tailored for the challenging DNS of incompressible wall turbulence at extreme scales. An adaptive multi-level parallelization strategy is proposed to fully exploit the multi-level parallelism of various architectures and enhance computational performance. The communication performance of global transpose and halo exchange is significantly improved by a tridiagonal solver based on the parallel diagonal dominant (PDD) algorithm and three RDMA-implemented communication optimizations. Strong scaling tests on the Tianhe-2A supercomputer show that PowerLLEL achieves nearly 92% parallel efficiency with up to 31,104 cores on a grid size of 143.3 billion.

We present PowerLLEL, a high-performance finite difference solver tailored for the challenging DNS of incompressible wall turbulence at extreme scales. An adaptive multi-level parallelization strategy is proposed to fully exploit the multi-level parallelism of various architectures and enhance computational performance. The communication performance of global transpose and halo exchange is significantly improved by a tridiagonal solver based on the parallel diagonal dominant (PDD) algorithm and three RDMA-implemented communication optimizations. Strong scaling tests on the Tianhe-2A supercomputer show that PowerLLEL achieves nearly 92% parallel efficiency with up to 31,104 cores on a grid size of 143.3 billion.

Event Type

Posters

Research Posters

TimeTuesday, 14 November 202310am - 5pm MST

LocationDEF Concourse

TP

XO/EX

Archive

view

Hide Details

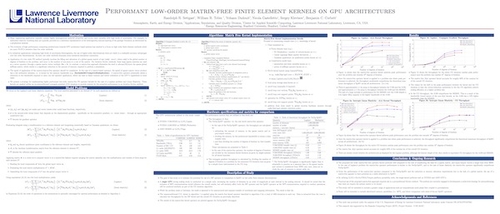

Performant Low-Order Matrix-Free Finite Element Kernels on GPE Architectures

SessionResearch Posters Display

DescriptionNumerical methods such as the Finite Element Method (FEM) have successfully leveraged the computational power of GPU accelerators. However, much of the effort around FEM on GPU’s has been focused on high order discretizations due to their higher arithmetic intensity and order of accuracy. For applications such as the simulation of geologic reservoirs, high levels of heterogeneity results in high-resolution grids characterized by highly discontinuous (cell-wise) material property fields. Additionally, the significant uncertainties typical of geologic reservoirs reduces the benefits of high order accuracy, and low order methods are typically employed. In this study, we present a strategy for implementing highly performant low-order matrix-free FEM operator kernels in the context of the conjugate gradient method. Performance results of the operator kernel are presented and are shown to compare favorably to matrix-based SpMV operators on V100, A100, and MI250X GPUs.

Event Type

Posters

Research Posters